Finding Names of Characters in Text using Python and Machine Learning

Published

One of my side project involves the generation of storylines based on the Hero’s Journey Pattern so I’m looking at ways to automate the extraction of data of interest from novels.

The first focus is to extract the names of all characters, which will then open the way to find other pieces of information and I’ll briefly lay out 3 ways to get the names data.

Using NLTK

The first way is to use the Natural Language Toolkit, aka NLTK. NLTK is a well-known open-source toolkit used to work with textual data. It’s easy to use and quite fast and we can see below.

>>> import nltk

>>> sentence = """At eight o'clock on Thursday morning

... Arthur didn't feel very good."""

>>> tokens = nltk.word_tokenize(sentence)

>>> tokens

['At', 'eight', "o'clock", 'on', 'Thursday', 'morning',

'Arthur', 'did', "n't", 'feel', 'very', 'good', '.']

>>> tagged = nltk.pos_tag(tokens)

>>> tagged[0:6]

[('At', 'IN'), ('eight', 'CD'), ("o'clock", 'JJ'), ('on', 'IN'),

('Thursday', 'NNP'), ('morning', 'NN')]it’s main issue though is that it is not exactly accurate as is. The above is an example taken from the home page and there is something quite glaring: the token “NNP” is what tags a word as a proper noun, a name. The problem is that “Thursday” is tagged the same way as John; they are both tagged as “NNP”. What’s more, NLTK can only tag single words.

There is quite a bit of data cleaning to perform, and you can refer to this article to get started.

Spacy

Overall, the accuracy of NLTK leaves a lot to be desired, so we’ll move on to our next candidate package: Spacy.

Spacy uses a Machine Learning model to tag text elements, which means the model is much slower and uses a lot more memory and CPU than NLTK, but it’s also a lot better in terms of accuracy.

Besides its accuracy, Spacy is also great since it provides additional information on tokens (such as its position, or the lemma of verbs). It can also handle tokens of variable lengths and can categorize tokens; for example, persons and organisations are considered differently since the model has been trained on real-world data, but this can be a bit problematic when using texts from novels (since organization names are likely to be previously unseen by the model), so there’s obviously still need for additional checks by a human and post processing of data.

Overall, it’s really great and they propose different models (bigger and better) if needed. The only downside is that the models use a lot more memory and you’ll need tensorflow so that means higher cost to run it on a dedicated server compared to NLTK.

BERT & HuggingFace

If you’ve never heard of it, HuggingFace provides pretrained NLP models for free. This means you can have access to State of the Art models such as BERT.

These models have seen a heavy focus on Transfer Learning (which means the model has learned to perform different tasks), so the HuggingFace pipeline allows you to specify the tasks for which you want to use the model, be it sentiment analysis, named entity recognition, or questions answering.

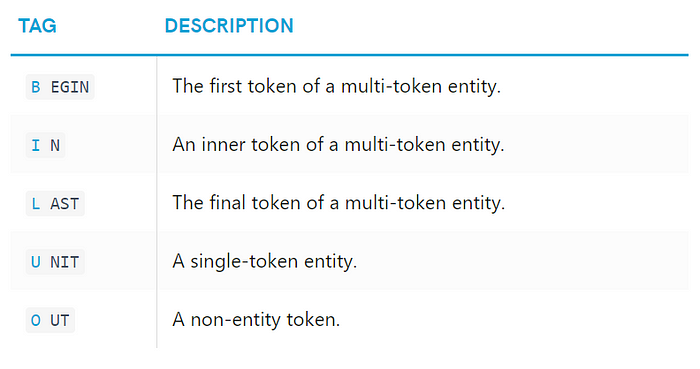

The named entity recognition (NER) is extremely nice and also implements the BILUO tagging scheme so you get a lot of information about your tokens, which makes post processing of data much easier.

I found this extremely interesting since you can also use BERT as a question answering model: write down a question and give the text data as context and BERT will try to answer it.

This is possible once you understand how the model works: BERT has been trained by “masking” (aka removing) part of sentences and the model had to try to guess what was the data removed. Because of this training, the question-answering pipeline will check your question and output a mask containing data from your context data. For example, with the “Arthur” example given in the NLTK section, the question “Who was not feeling well?” the answer would be a dict with the text “Arthur”, the start position of the “Arthur” text in the context data and the end position.

The question answering can therefore only output data which is contained in the context input, so this has its limits, but I think it can be an extremely powerful tool in a pipeline for automated analysis of textual data.

Anyways, that’s all for now. These are interesting approaches which still need some additional work afterwards, but they are already great starts to applied NLP.